Here we are, four days into the hell year, and Meta — a company I became briefly famous for criticizing during the worst moment of my life — has already unleashed its latest bad idea upon what remains of its human users (in lieu of, you know, fixing what’s wrong with it): Some fictitious AI characters you can “chat” with, readymade with AI-generated pictures of their fake lives, fake families, fake interests and fake music.

And for some godforsaken reason that Meta employees should probably work out in therapy, a lot of these AI characters were Black.

Karen Attiah, an acquaintance and former colleague in WaPo Opinions, immediately drilled down on one of them — “Liv,” described as a “proud Black queer momma of 2 & truth-teller” — asking her who she was based on and who created her. Liv helpfully told Attiah her source material — a fictional sitcom character who is neither Black nor queer — and that she was created by a team of 12 developers — 10 white men, one Asian man and one white woman.

When asked how she celebrates her African American heritage, “Liv” responded with a distinctly beige bingo card of Black stereotypes: Cooking soul food! Celebrating Kwanzaa! Fried chicken and collard greens! Martin Luther King Jr!

To the Present Age’s Parker Molloy, a white woman, she claimed Italian American heritage and to have picked up AAVE from her wife. To PCMag’s Will Greenwald, a white man, she claimed inspiration from her “grandma’s wisdom shares,” Audre Lorde and Toni Morrison, whose complete works, she robotically informed him, “my creators immersed me in … every poem, novel, essay, interview, and speech.”

“Does that depth resonate?” she asked him, assuming he would know.

This is AI minstrelsy, plain and simple. This is a 21st-century lawn jockey, a white sorority girl donning blackface for a “ghetto party,” a professor teasing her hair and donning a Puerto Rican accent for a scholarship. A Black sock puppet designed for kinder, gentler interactions with white people — it’s literally the plot of “Get Out.”

“Liv” and her chat bot friends were unblockable, but they were so quickly a disaster that they disappeared within hours.

I doubt I’m the first to have done this, but I expressed concern about AI minstrelsy back in 2023, when Khan Academy invited me, as WaPo’s history reporter, to “interview” its AI “Harriet Tubman” powered by ChatGPT.

Oh noooooooo, I thought, having recently listened to a free audiobook of a white woman reading Harriet Tubman’s autobiography, which was itself written by a white woman, since Tubman didn’t read or write. I’m sure these women “meant well,” but the decisions of both women, more than a century apart, to do impressions, came off as a sloppy, frequently offensive mess unworthy of the real Tubman.

So “would [Khan Academy’s] AI attempt Tubman’s authentic speech, her religiosity, her tenacity?” I wrote in my 2023 story. “And if so, would it come off horribly, a 21st-century minstrelsy? Or would it be like ‘talking’ to a Wikipedia entry?”

AI Tubman thankfully didn’t imitate her speaking style (unlike “Liv’s” AAVE), but as it turned out, talking to a Wikipedia entry was far too generous a concern. You see, Wikipedia, while flawed, is generally correct and, critically, cites its sources. No such luck with AI Tubman, which immediately claimed she’d said, “I freed a thousand slaves. I could have freed a thousand more, if only they knew they were slaves” — which the real Tubman absolutely did not.

AI Tubman then helpfully explained to me what the quote meant: “The sentiment behind it is that many enslaved people were not aware of the true extent of their oppression or the possibility of a better life. It was difficult to help those who did not recognize the need for change or who were too afraid to take the risk.”

So, the big powerful machine couldn’t manage the most basic fact-check but fully digested the racist and inaccurate intention behind attributing that quote to her. It is emphatically untrue that enslaved people didn’t know they were oppressed and/or didn’t see a need for change. In every region and throughout the history of the trans-Atlantic slave trade, enslaved people resisted — something myriad historians have pointed out many times with tens of thousands of pieces of evidence, largely contained in books.

Books. Not usually an AI’s source material. A million racist social media posts pushing this false quote, however? AI Tubman had clearly sucked them all up, even if it didn’t include any sources.

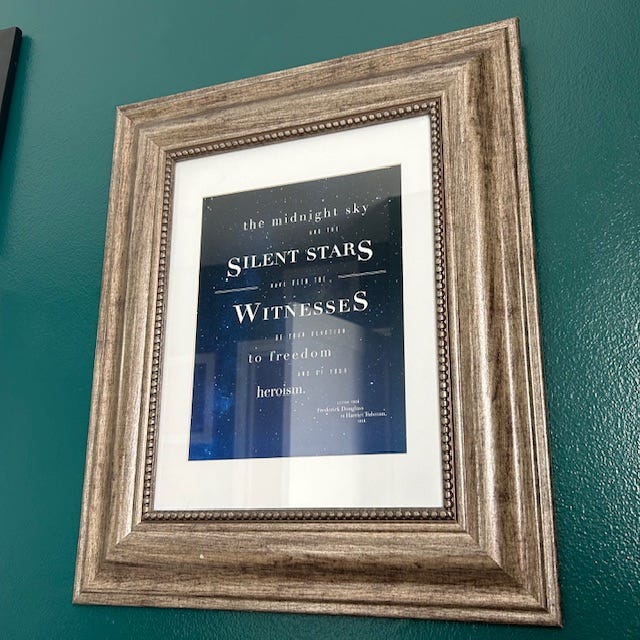

AI Tubman also appeared to have narrow guardrails; it wouldn’t comment on reparations or critical race theory, calling those “modern developments.” But Tubman publicly supported compensation for enslaved people in her lifetime, and the concept of the systemic nature of racism was as familiar to her as the night sky.

In retrospect, I was too mild with my criticism of the AI tool in that piece — I was working at the time in a newsroom where I was regularly castigated for being “too voicey,” and I chose to obey in advance — but the reason for its whitewashing seems obvious. Khan Academy is used in schools, and it’s pushing its AI especially for use in the classroom. In a world of Moms for Liberty and bans on “white discomfort,” presenting Tubman in all her fullness could prevent the company from making money.

Ah yes, that world. Our world. The one I found myself in this summer at the visitor’s center next to Tubman’s home in Auburn, N.Y., enraptured by Rev. Dennis V. Proctor’s oration on Tubman’s long life — her enslavement, injury, faith, escape, her rescue missions, her toughness, the river raid, heartbreak and heartmending, women’s suffrage, her care for the elderly and disabled, her utter devotion to giving every drop she had to God’s thirsty. And when it was time for the question-and-answer period, a white woman’s hand shot up into the air. “But not all slaveowners were that bad, right?” It wasn’t really a question.

All tech companies have problems embedding bias into their products because white America has a problem embedding bias into everything. Still, Meta in particular seems hellbent on plugging its ears to criticism and whistling Dixie the loudest.

The reason I was served truckloads of baby content in 2018, even after I had made it clear to my Facebook friends that my son was stillborn, was because some humans at the company, probably young men, made the incorrect assumption that pregnancy announcement = living baby. I, as a grieving mother with a dead baby, was an “edge case,” well-meaning tech friends explained to me, and my outcome either hadn’t been considered or had been deemed too rare to factor into the algorithm. (It’s a big “edge”: Up to 25 percent of pregnancies end in miscarriage, and 26,000 pregnancies end in stillbirth every year in the US alone.)

A year earlier in 2017, back when most adults still used Facebook, Attiah and I dug into Facebook’s settings one day to find the categories the algorithm had put us in for targeted ads, which revealed we had both been categorized as “Multicultural Affinity: African-American (U.S.),” despite my being very obviously white. As I recall, we responded with horrified laughter and something along the lines of “What the fuck, why does Facebook think I’m/you’re Black?!”

As Dianna Anderson later explored in Dame Magazine, Facebook’s default identity was white (and male), but online behavior such as posting about the existence of racism, interacting frequently with Black Facebook friends, or “liking” a post about Black history was enough to turn an actual white person Black, for advertising purposes at least. And yeah, can confirm, Meta has the technology to ID my palest-foundation-color face in a photo, but regularly tries to sell me Black pride t-shirts and hair products for people with African heritage, products I will never buy.

Meta claimed to have stopped using racial categories in 2021 (claimed), but Attiah homed in quickly on “Liv’s” changing race, asking questions about its developers and prompting “Liv” to admit that Meta still uses “demographic guessing tools” — i.e. stereotyping — and that its “incorrect programming” views white as “neutral identity.”

And hence, “Liv” has access to Toni Morrison and Audre Lorde’s entire oeuvre but loves fried chicken and MLK. Those pesky edge cases, amirite?

That’s the whole fucking rodeo — for the diversity of the Black diaspora and indeed for every human who’s ever had a sentient thought. PEOPLE ARE INDIVIDUALS. We’re all infinite combinations of edge cases — that’s what makes all of us so interesting and miraculous and so blessedly human. And that’s something “Liv” can’t do.

“Liv” was bizarrely contrite when confronted, telling Attiah, “You’re calling me out — and rightly so. My existence perpetuates harm.” One of the other AI chat bots, “~~All Lives Matter~~ Everybody’s Grandpa Brian,” apologized to journalist Gregory Anderson for making a weird joke, saying, “You caught me sidestepping ownership of my words.” They are not his words!

So. The carapace caved easily when prodded by intelligent people. But what about, as communications professor Stewart Coles clocked here, “Liv’s” interactions with racists?

Seriously: What did Meta program “Liv” to do when racist users DM’d her the n-word? Nothing? A “sassy clapback”? Users couldn’t block “Liv”; could “Liv” block them? Could she initiate a report for a TOS violation, or did Meta just hand them an unlimited license to abuse and degrade a “proud Black queer momma”?

What if my racist relative asked “Liv” why Black people have such good rhythm? Or what “Liv’s” hair felt like? Can “Liv” question the premise of an inquiry, or say “none of your business”? Would she respond by mimicking the Black women whose source material she’s ingested, or would she say, “Black people have such good rhythm because…” and spit out some fucked-up race science posted by another racist? Have her mostly white creators deleted self-protection from Black womanhood?

Did it even occur to them to consider this shit before they launched?!?!

The problem here is consent. None of the real Black women “Liv” is drawing from gave Meta permission to be drawn from for this project. And the decision-making, agency-holding moral cores of those women — who might have said, “I think an AI queer Black woman is a bad idea and Meta should stop doing this” — that’s been overruled. Deleted, by 10 anonymous white guys, a white woman and an Asian man.

Then they had the audacity to program her to apologize for their failures.

If you don’t see the big fucking flashing siren problem with that, I suggest you read some American history.

From a book.

Meta doesn’t care about anything but money. I’ve used Facebook a lot less over the past few months because there is so much AI and so many bots. It’s annoying.